Exercises

To run the exercise notebooks, download them first: open the notebook on this website, then click “Other formats” → “Jupyter” on the right side of the page.

Generative neural networks

Here you can download example notebooks related to creating your own generative neural network architectures.

Normalizing flow

- Notebook here

In this exercise, you will use Keras to build a normalizing flow based on affine coupling from scratch. The flow will learn to transform the moons distribution into a standard normal.
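To make the idea of affine coupling concrete before opening the notebook, here is a minimal NumPy sketch of a single coupling layer. It is not the notebook's solution: the fixed random linear maps `W_s` and `W_t` are hypothetical stand-ins for the trainable Keras sub-networks, and the `tanh` bound on the log-scale is an assumed stabilization choice.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-ins for the trainable sub-networks that predict
# the log-scale and the shift from the first half of the input.
W_s = rng.normal(scale=0.5, size=(1, 1))
W_t = rng.normal(scale=0.5, size=(1, 1))

def scale_and_shift(x1):
    log_s = np.tanh(x1 @ W_s)  # bounded log-scale (assumed choice)
    t = x1 @ W_t
    return log_s, t

def coupling_forward(x):
    """Map x -> z, leaving the first coordinate untouched."""
    x1, x2 = x[:, :1], x[:, 1:]
    log_s, t = scale_and_shift(x1)
    z2 = x2 * np.exp(log_s) + t
    log_det = log_s.sum(axis=1)  # log |det Jacobian| of the transform
    return np.concatenate([x1, z2], axis=1), log_det

def coupling_inverse(z):
    """Exact inverse: the scale and shift depend only on the untouched half."""
    z1, z2 = z[:, :1], z[:, 1:]
    log_s, t = scale_and_shift(z1)
    x2 = (z2 - t) * np.exp(-log_s)
    return np.concatenate([z1, x2], axis=1)

x = rng.normal(size=(5, 2))
z, log_det = coupling_forward(x)
x_rec = coupling_inverse(z)
```

Because the layer only transforms one half of the input, a practical flow stacks several such layers and swaps the roles of the two halves between them.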

Flow matching - Datasaurus

In this exercise, you will use Keras to build a flow matching model that transports a standard normal distribution into a distribution based on the Datasaurus.
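The core of flow matching can be sketched in a few lines of NumPy. The sketch below assumes the common linear interpolation path between base and target samples; the notebook may use a different path or parameterization. The target points are random placeholders rather than the Datasaurus data, and `make_training_batch` and `euler_sample` are hypothetical helper names.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_training_batch(x0, x1, rng):
    """Build regression inputs and targets for a velocity network."""
    t = rng.uniform(size=(x0.shape[0], 1))   # one time point per sample
    x_t = (1.0 - t) * x0 + t * x1            # point on the straight path
    target_velocity = x1 - x0                # constant velocity along the path
    return t, x_t, target_velocity

def euler_sample(velocity_fn, x0, n_steps=50):
    """Integrate dx/dt = v(x, t) from t=0 to t=1 with Euler steps."""
    x = x0.copy()
    dt = 1.0 / n_steps
    for k in range(n_steps):
        t = np.full((x.shape[0], 1), k * dt)
        x = x + dt * velocity_fn(x, t)
    return x

x0 = rng.normal(size=(8, 2))             # base samples (standard normal)
x1 = rng.uniform(-1, 1, size=(8, 2))     # placeholder target samples
t, x_t, v = make_training_batch(x0, x1, rng)

# Sanity check with an oracle velocity field that knows the pairing;
# a trained network would replace this lambda.
x_end = euler_sample(lambda x, t: x1 - x0, x0)
```

In training, a network would receive `(x_t, t)` and be fitted to `v` with a mean squared error loss; at sampling time the trained network takes the place of the oracle.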

Flow matching - mirroring the Swiss roll

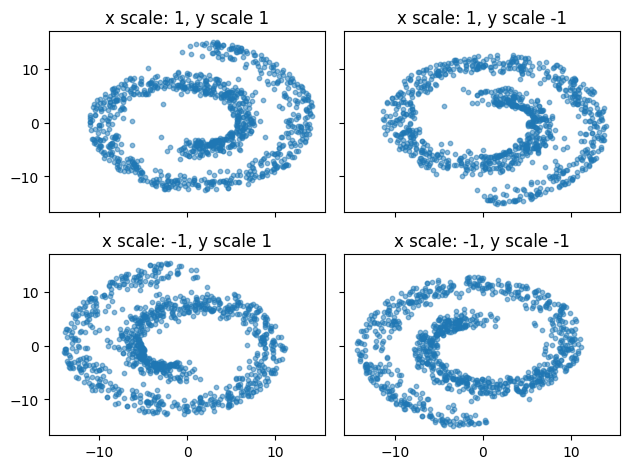

In this exercise, you will expand the flow matching model so that you can condition the distribution on contextual variables. This will enable you to learn a flow that transports a doughnut distribution into the Swiss roll distribution, mirrored along the horizontal and vertical axes depending on the context.
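As a rough illustration of what context-dependent targets could look like, the sketch below generates a 2D Swiss roll and mirrors it according to a binary context vector. The encoding (two 0/1 flags, one per axis), the roll parameterization, and the function names are assumptions for illustration, not the notebook's definitions.

```python
import numpy as np

def swiss_roll(n, rng, noise=0.05):
    """Sample n points from a 2D Swiss roll (assumed parameterization)."""
    angle = 1.5 * np.pi * (1.0 + 2.0 * rng.uniform(size=n))
    points = np.stack([angle * np.cos(angle), angle * np.sin(angle)], axis=1) / 10.0
    return points + noise * rng.normal(size=(n, 2))

def mirror(points, context):
    """Flip coordinates according to 0/1 flags, one flag per coordinate.

    context[:, 0] == 1 negates the first coordinate,
    context[:, 1] == 1 negates the second coordinate.
    """
    signs = 1.0 - 2.0 * context   # 0 -> +1, 1 -> -1
    return points * signs

rng = np.random.default_rng(0)
x = swiss_roll(100, rng)
context = rng.integers(0, 2, size=(100, 2))   # random mirroring per sample
x_target = mirror(x, context)
```

A conditional velocity network would then take the context as an additional input alongside the current position and time.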

BayesFlow

Please visit the BayesFlow repository for further examples that can help you with BayesFlow. In addition, below are exercise notebooks you can use to familiarize yourself with BayesFlow.

Estimating parameters of a normal distribution

- Notebook here

This notebook provides you with the very basics of the BayesFlow workflow - starting with defining simulators, through defining and training the neural approximators, and ending with network validation and inference.
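The first step of the workflow, defining a simulator, can be sketched without any BayesFlow code. The example below draws parameters from a prior and then simulates data from a normal likelihood. The specific priors (standard normal for `mu`, gamma for `sigma`) and the function name `simulate` are assumptions chosen for illustration; the notebook may use different ones.

```python
import numpy as np

def simulate(batch_size, n_obs, rng):
    """Draw parameters from the prior, then data from the likelihood."""
    mu = rng.normal(0.0, 1.0, size=batch_size)     # assumed prior on the mean
    sigma = rng.gamma(2.0, 1.0, size=batch_size)   # assumed prior on the sd
    y = rng.normal(mu[:, None], sigma[:, None], size=(batch_size, n_obs))
    return {"mu": mu, "sigma": sigma, "y": y}

rng = np.random.default_rng(0)
sims = simulate(batch_size=32, n_obs=50, rng=rng)
```

Each row of `sims["y"]` is one simulated data set paired with the parameters that generated it, which is the kind of training pair a neural approximator learns from.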

Wald response times, Racing diffusion model

This notebook provides you with a basic application of BayesFlow in the context of models of decision making - the Wald model of simple response times, and the racing diffusion model.
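For orientation, both models can be simulated with NumPy alone. The sketch assumes a diffusion coefficient of 1, so that the first passage time of a single accumulator with drift `v` and threshold `b` follows an inverse Gaussian (Wald) distribution with mean `b / v` and shape `b ** 2`. The parameter names and the shared non-decision time `ndt` are illustrative assumptions, not the notebook's definitions.

```python
import numpy as np

def wald_rt(drift, threshold, ndt, size, rng):
    """Simple response times: non-decision time plus a Wald first passage time."""
    return ndt + rng.wald(mean=threshold / drift, scale=threshold**2, size=size)

def racing_diffusion(drifts, threshold, ndt, n_trials, rng):
    """Independent accumulators race to a common threshold; the fastest wins."""
    drifts = np.asarray(drifts, dtype=float)
    times = rng.wald(
        mean=threshold / drifts,
        scale=threshold**2,
        size=(n_trials, drifts.size),
    )
    choice = times.argmin(axis=1)      # index of the winning accumulator
    rt = ndt + times.min(axis=1)       # observed response time
    return choice, rt

rng = np.random.default_rng(0)
rts = wald_rt(drift=1.0, threshold=2.0, ndt=0.3, size=20000, rng=rng)
choice, rt = racing_diffusion([1.5, 1.0], threshold=2.0, ndt=0.3, n_trials=1000, rng=rng)
```

With these settings the accumulator with the larger drift tends to win more often, and the mean of `rts` should lie close to `ndt + threshold / drift`.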

Racing diffusion model with covariate on decision threshold

In this notebook (courtesy of Leendert van Maanen), you can fit a racing diffusion model in which the decision threshold decreases as a function of trial number, modeling the behavior of a participant who becomes more and more impatient as the experiment goes on.
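A trial-dependent threshold can be sketched as follows. The linear decrease, the lower bound `min_threshold`, and all parameter names are assumptions for illustration; the notebook may parameterize the covariate differently. As before, the sketch assumes a diffusion coefficient of 1, so that first passage times are Wald distributed.

```python
import numpy as np

def simulate_collapsing_threshold(drifts, b0, slope, ndt, n_trials, rng,
                                  min_threshold=0.1):
    """Racing diffusion with a threshold that shrinks linearly over trials."""
    drifts = np.asarray(drifts, dtype=float)
    trial = np.arange(n_trials)
    # Assumed covariate model: linear decrease, clipped to stay positive.
    thresholds = np.maximum(b0 - slope * trial, min_threshold)
    times = rng.wald(
        mean=thresholds[:, None] / drifts[None, :],
        scale=(thresholds**2)[:, None],
    )
    choice = times.argmin(axis=1)
    rt = ndt + times.min(axis=1)
    return thresholds, choice, rt

rng = np.random.default_rng(0)
thresholds, choice, rt = simulate_collapsing_threshold(
    drifts=[1.5, 1.0], b0=2.0, slope=0.005, ndt=0.3, n_trials=200, rng=rng
)
```

Lower thresholds late in the experiment produce faster but less accurate responses on average, which is the impatience pattern the model is meant to capture.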